How Propaganda Spreads When Nobody Believes It

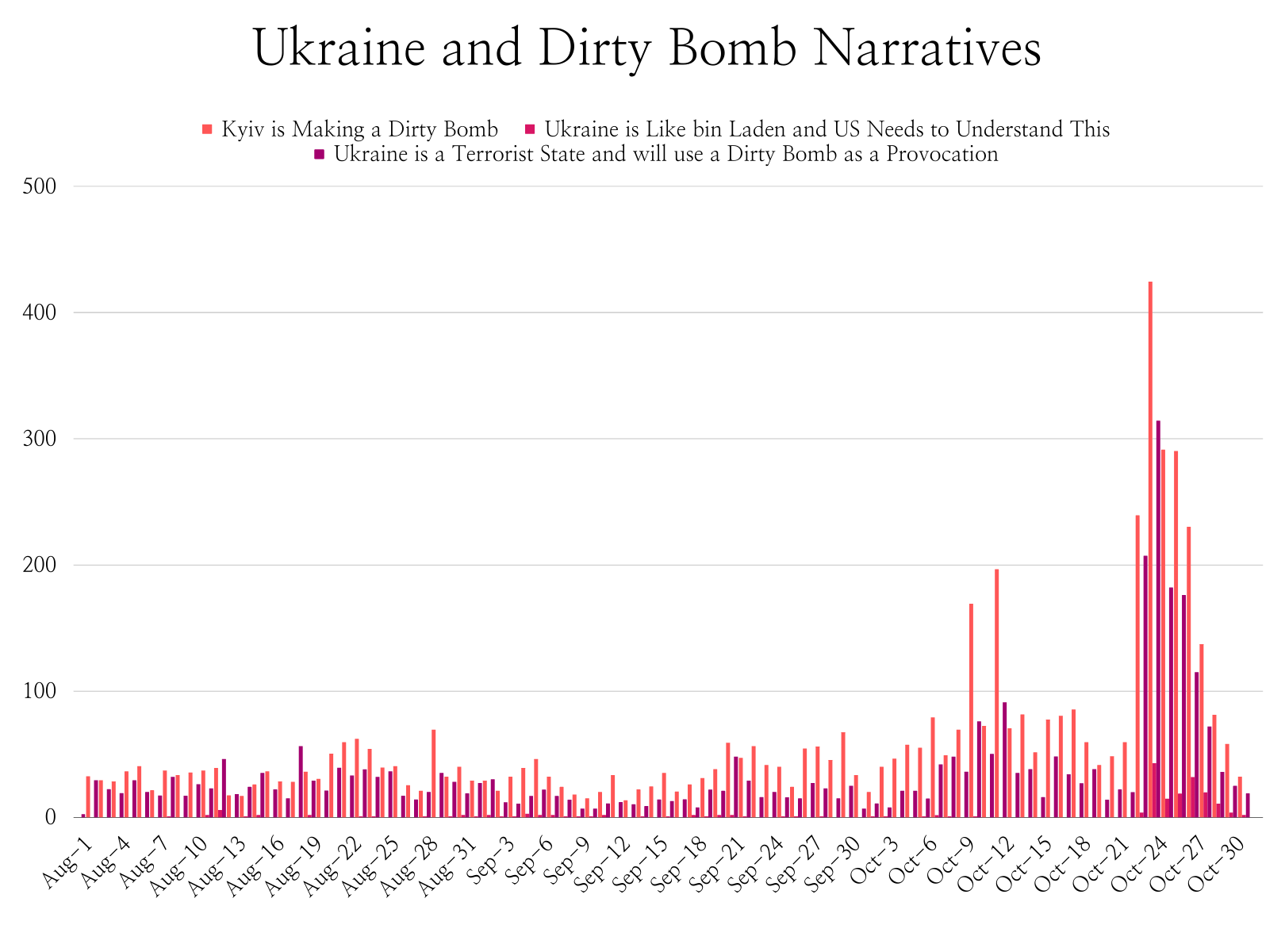

FilterLabs.AI was back in the news last week. A story in The New York Times quoted chief executive Jonathan Teubner on the subject of a new rumor swirling around Russian social media: an alleged Ukrainian plot to detonate a dirty bomb and blame the Russians.

“The sources of the narrative are mostly Kremlin-aligned sites,” Teubner told The Times. “But it is being repeated by some independent outlets who are attempting to refute it.”

Sure enough, news site after news site has reported on the rumor, with fact-checkers sputtering that of course the rumor is groundless and absurd.

Be that as it may, the rumor itself has an interesting backstory, which didn’t make it into the Times article. In following the birth and spread of the dirty bomb rumor, we can see something about the nature of today’s propaganda, why it’s so hard to fight, and what can be done about it.

Dirty Bomb Chatter

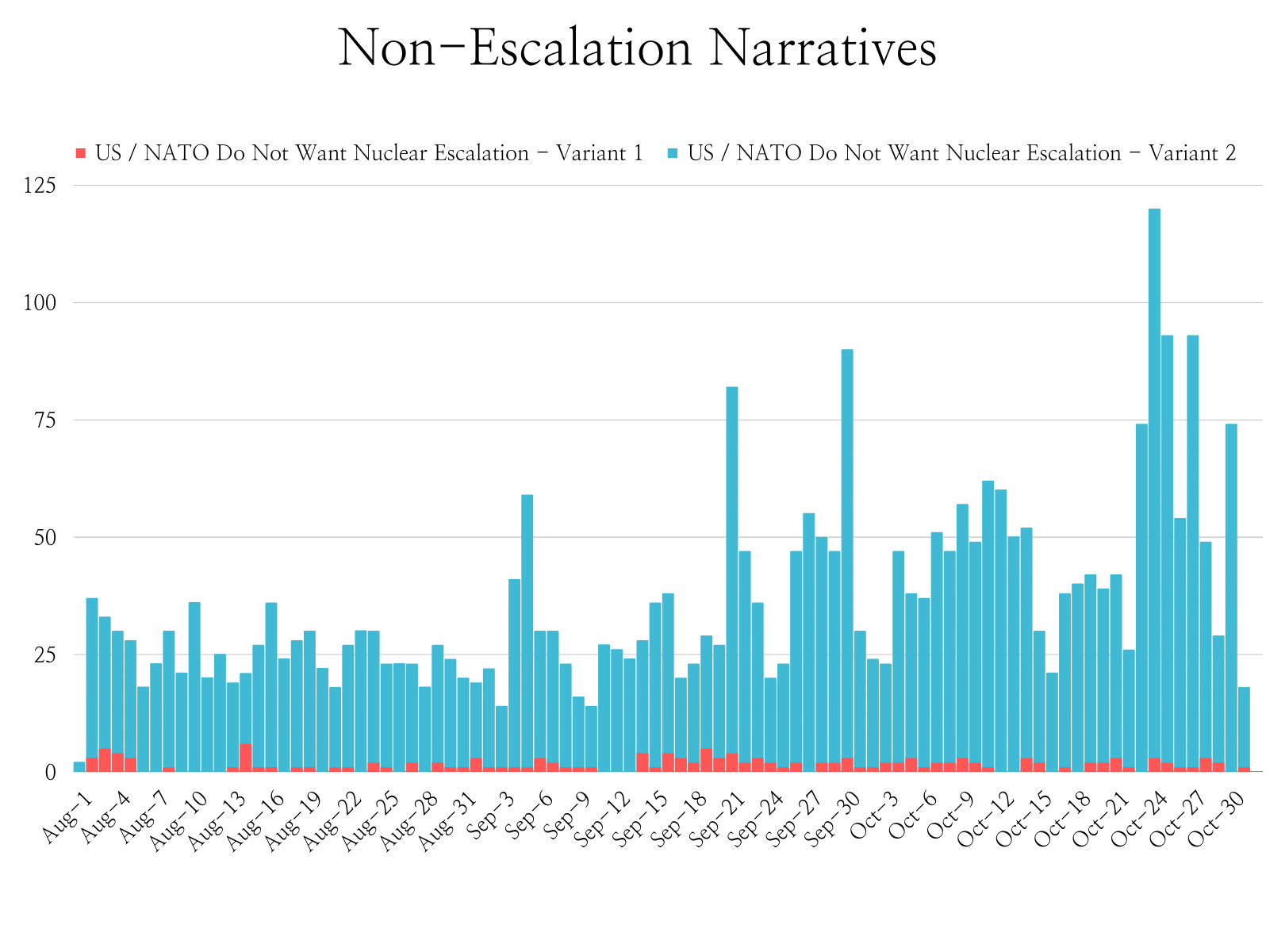

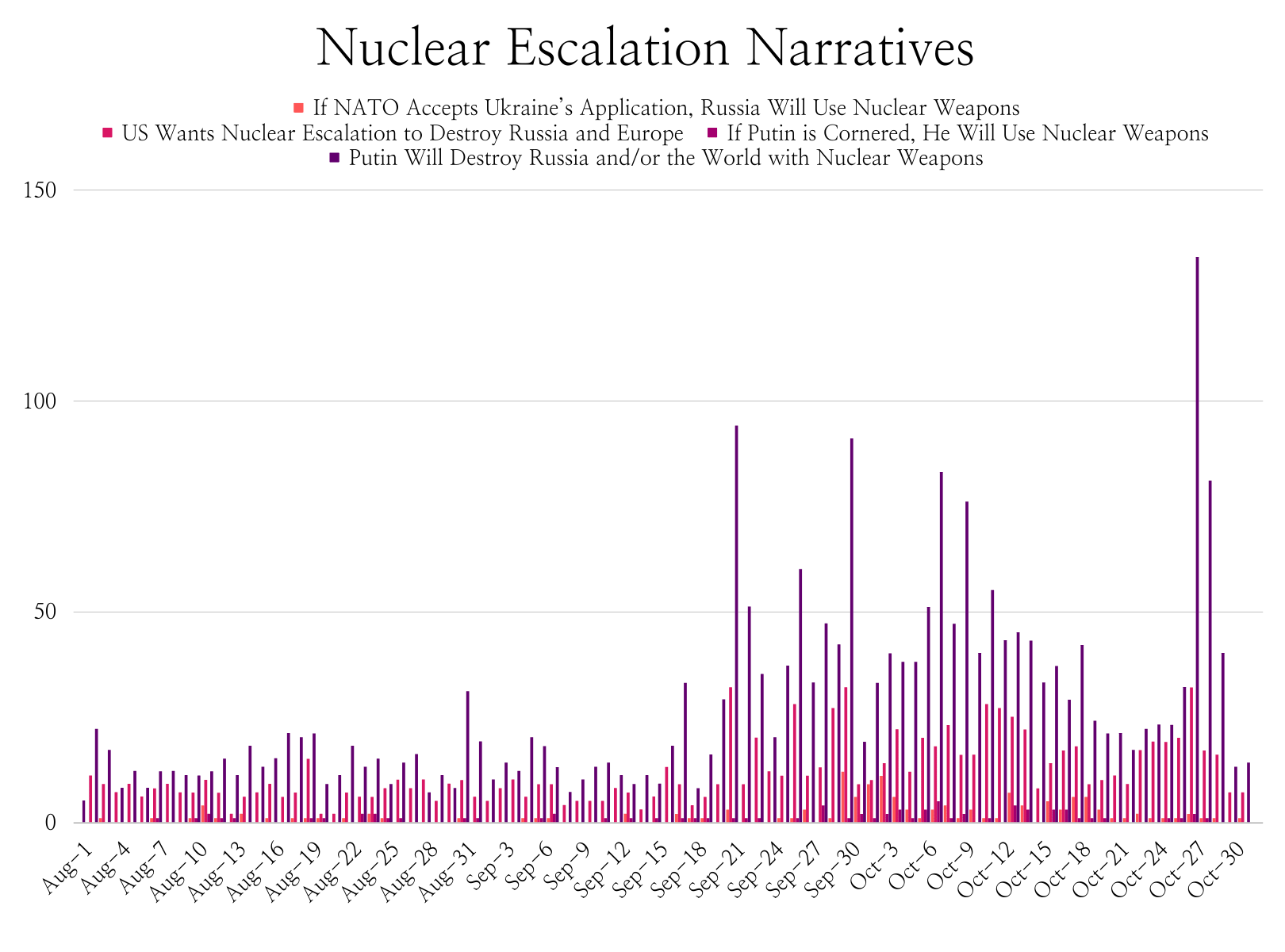

For months, FilterLabs has been tracking chatter about nuclear weapons on Russian popular forums, messaging apps, news sites, and social media channels. The rumor about the “dirty bomb” (a conventional explosive with radioactive materials attached) was only one of several nuclear narratives recurring in the chatter. FilterLabs identified five common ones:

1. The US and NATO do not want nuclear escalation.

2. Ukraine wants an escalation, so they can blame Russia for it.

3. If NATO accepts Ukraine’s application, Russia will use nuclear weapons.

4. The US wants an escalation, because it will destroy both Russia and the European Union.

5. Putin will use nuclear weapons to destroy Russia and/or the world, because he is a madman.

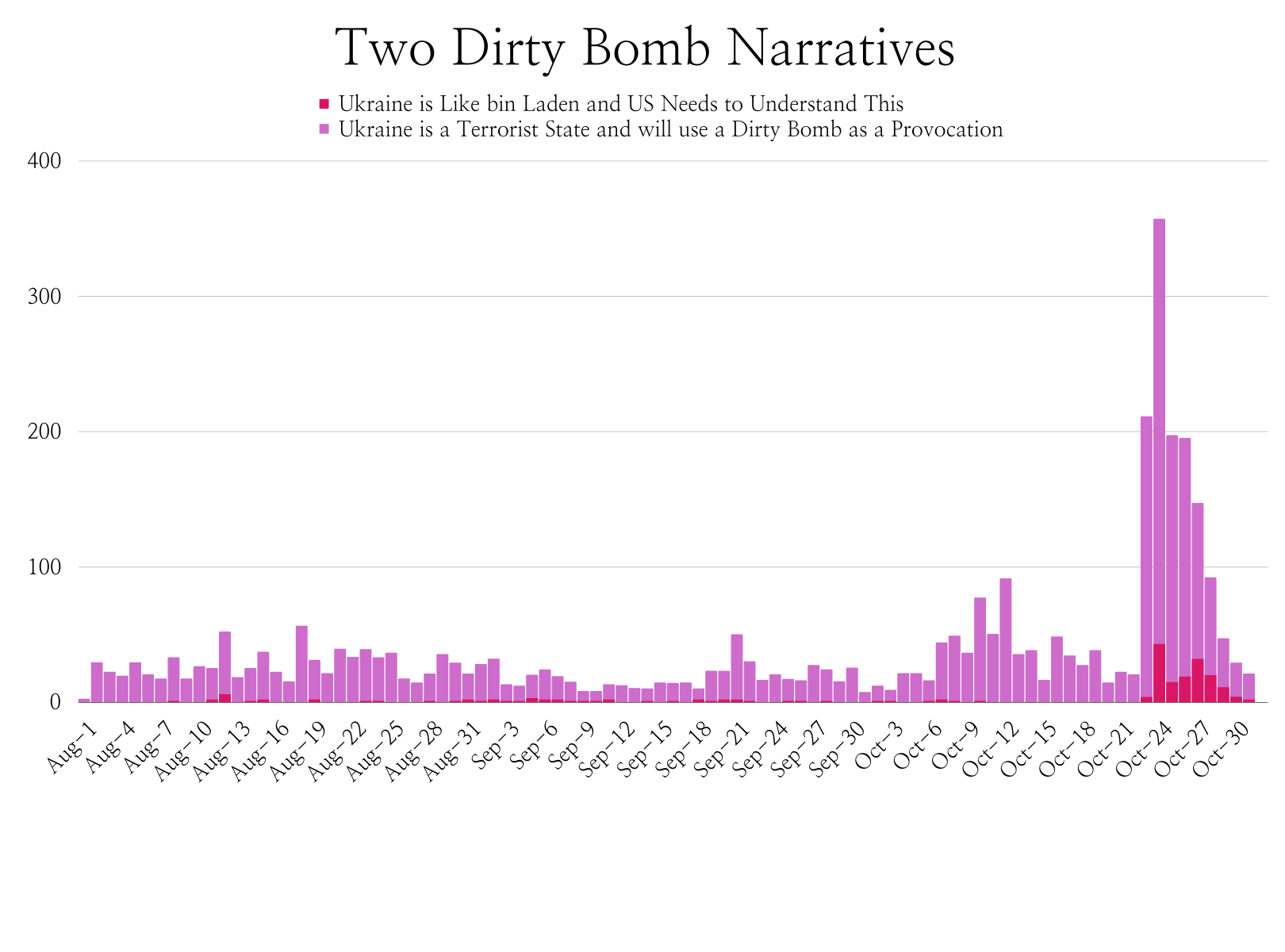

When FilterLabs started tracking these narratives over the summer, none were very widespread. But from the middle of September to the middle of October, narratives 1–4 began gathering steam.

Then, quite suddenly, on October 23, multiple Russian news and social media sites started calling Ukraine a terrorist state and claiming that it would stage a dirty bomb attack and blame the Russians. The second narrative on the list had taken off.

The Story of the Story

How did this story explode so quickly?

It happened in a few discrete stages. FilterLabs traced the dirty bomb rumor’s takeoff back to an October 21 article on the local news website e1.ru by journalist Vasilina Beriozkina that looked like a straight news story but never substantiated its most alarming claims with any specific evidence. The article, which describes the so-called “Ukrainian plot,” relied on anonymous sources (“media has reported…”) and careful hedging about “...alleged” plots for false flag operations.

In no time, other news sites for large Russian cities picked up the story and ran with it, often leaving the original hedging behind. What started as a rumor was now treated as a fact.

Then, on October 23, Russian Defense Minister Sergei Shoigu called his counterparts in the United States and elsewhere to discuss the alleged dirty bomb. Once a major political figure was peddling the narrative, it was news, and Western outlets had little choice but to cover it, however skeptically. The dirty bomb plot had become an international issue.

Anatomy of a Propaganda Campaign

The successful spread of the dirty bomb rumor offers several insights into Russian disinformation campaigns, and into the dynamics of online propaganda.

First, modern propaganda does not need to emerge directly from governments. It seems likely that many popular and ostensibly independent Russian news sites like e1.ru are running stories at the Kremlin’s request. These seemingly independent sources are particularly helpful in the early days of a propaganda campaign. They give rumors the air of legitimacy.

Second, propaganda campaigns really take off when these thinly sourced local news stories become international news. Government action in this phase can make a big difference. A regime has the power to turn a rumor into a big news story merely by having its senior military or diplomatic figures repeat it.

These official statements put the Western media in a real bind. Reporters and television producers may not believe the rumor, and may realize that by even discussing it they will lend it credibility; but if someone as important as the Russian minister of defense is repeating it with a straight face, that in itself becomes newsworthy. Western outlets repeatedly find themselves doing the next step of the Kremlin’s work for it, flooding the global media with stories about the rumor.

Is there another way?

Maybe. A first step might be to recognize and name something like Shoigu’s phone call as a pseudo-event. The term comes from the historian and one-time Librarian of Congress Daniel J. Boorstin. In his 1962 book The Image: A Guide to Pseudo-Events in America, Boorstin pointed out that many political events, everything from presidential debates to turkey pardons, existed only to be reported on.

Shoigu’s phone call was a classic pseudo-event. He was not describing anything that had happened, he was not announcing a new Russian initiative, and he certainly had no chance of convincing his interlocutors that what he was saying was true. That was all beside the point. His real objective was to create a scene, which would then be dutifully reported on. And it was.

The key to combating misinformation lies not only in fact-checking, but also in helping the public recognize pseudo-events and other propaganda tricks for what they are. Learning to spot them might help us better understand Russian propaganda, and it could perhaps also become a helpful heuristic in our own polarized political scene.

Read More: Russia insights from FilterLabs were also featured in this week’s New York Times story The Reality Behind Russia’s Talk About Nuclear Weapons.